Ollama-Powered Qwen Code: Privacy-First AI Coding

Qwen Code: Your Local AI Coding Assistant - A Powerful Alternative to Claude Code

The world of AI-powered coding assistants is rapidly evolving, and while Anthropic’s Claude Code has been making waves in the developer community, there’s an exciting open-source alternative that deserves your attention: Qwen Code. This command-line AI workflow tool brings the power of advanced coding assistance directly to your local development environment.

What is Qwen Code?

Qwen Code is a command-line AI coding agent developed by the Qwen team at Alibaba Cloud. Built as an adaptation of the Gemini CLI framework, it’s specifically optimized for Qwen3-Coder models and offers enhanced parser support and comprehensive tool integration. Like Claude Code, Qwen Code is designed to understand, edit, and automate work across large codebases, making it an invaluable companion for modern software development.

The tool leverages the powerful Qwen3-Coder models, with the flagship Qwen3-Coder-480B-A35B-Instruct being a 480-billion parameter Mixture-of-Experts model that supports up to 256K tokens natively and can be extended to 1M tokens. This massive context window allows for unprecedented understanding of large codebases and complex programming tasks.

Key Features and Capabilities

Code Understanding & Editing

Qwen Code excels at querying and editing large codebases that go beyond traditional token limits. It can analyze complex project structures, understand dependencies, and make intelligent modifications across multiple files.

Workflow Automation

The tool can automate various development workflows including:

- Handling pull requests (PRs)

- Complex variable base operations

- Generating JSDoc comments

- Creating unit tests

- Producing API documentation

Multi-Language Support

Qwen3-Coder models demonstrate excellent performance across more than 40 programming languages, with particularly impressive results in languages like Haskell, Racket, and Python. The latest Qwen2.5-Coder-32B scores 65.9 on McEval, showcasing its broad language capabilities.

Setting Up Qwen Code with Ollama

One of the most appealing aspects of Qwen Code is its ability to run locally using Ollama, giving you complete control over your development environment and ensuring your code never leaves your machine.

Prerequisites

- Ollama installed on your system

- Node.js and npm for the Qwen Code CLI

- Sufficient system resources (the larger models benefit from more RAM and GPU memory)

Installation Steps

- Install Ollama Model

1 2 3 4 5

# Pull a Qwen3 model (adjust size based on your system capabilities) ollama pull qwen3:30b-a3b # Or try other variants like: # ollama pull qwen2.5-coder:32b # ollama pull qwen2.5-coder:7b

- Install Qwen Code CLI

1

npm install -g qwen-code

- Configure API Endpoint

Set up Qwen Code to use your local Ollama instance running on

localhost:11434/api/v1.

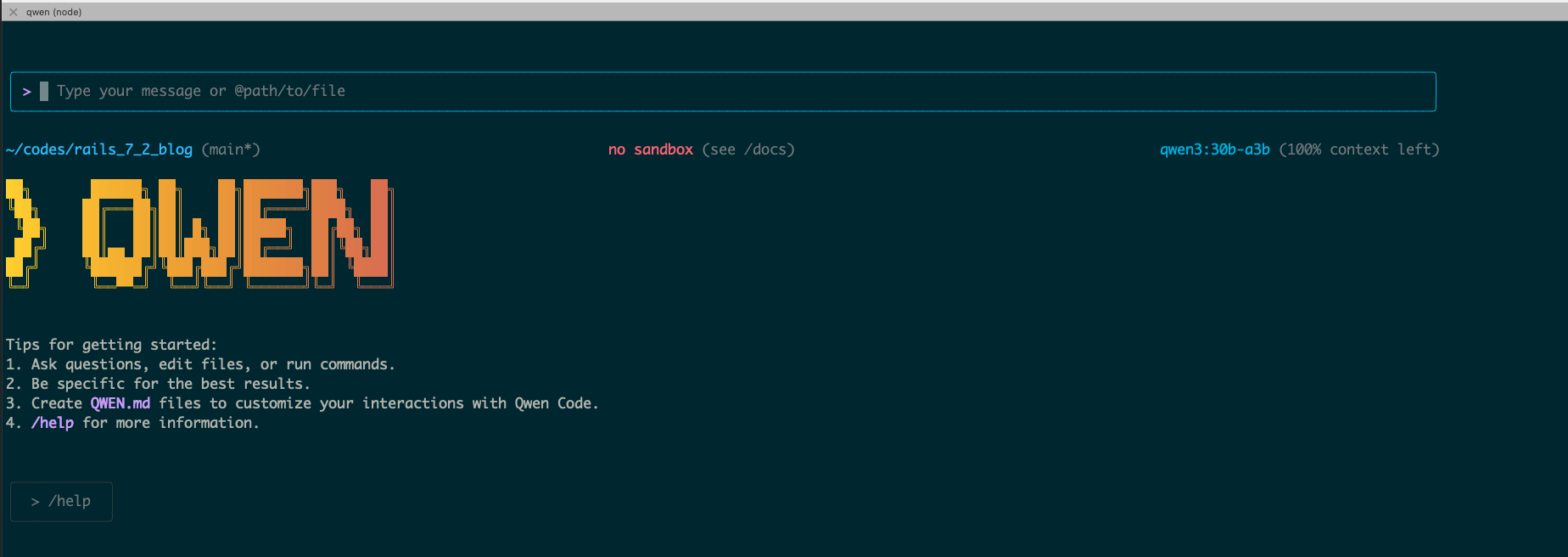

Running Qwen Code

The setup requires two terminal sessions:

Terminal 1 - Start Ollama Server:

1

ollama serve

Terminal 2 - Run Qwen Code:

1

2

qwen --help # View all available options and commands

qwen # Start interactive session

Qwen Code vs Claude Code: Key Differences

While both tools serve similar purposes as AI coding assistants, there are several important distinctions:

Deployment Model

- Qwen Code: Runs entirely locally with Ollama, ensuring complete privacy and no dependency on external APIs

- Claude Code: Connects to Anthropic’s cloud-based Claude models

Cost Structure

- Qwen Code: Free to use once set up locally (only hardware costs)

- Claude Code: Requires API credits and usage-based pricing

Customization

- Qwen Code: Open-source nature allows for extensive customization and fine-tuning

- Claude Code: Limited customization options as a proprietary tool

Performance Characteristics

Both tools may issue multiple API calls per cycle, resulting in higher token usage for complex tasks. However, Qwen Code’s local execution means you’re only limited by your hardware rather than API rate limits.

Best Practices and Tips

Model Selection

Choose your Qwen model based on your system capabilities:

- qwen3:7b - Good for basic coding tasks on lower-end hardware

- qwen3:30b-a3b - Balanced performance for most development tasks

- qwen2.5-coder:32b - Excellent coding performance with strong multi-language support

System Requirements

For optimal performance, consider:

- GPU: 24GB+ VRAM for larger models

- RAM: 128-256GB for the largest models

- Context Length: Use 65,536 tokens as recommended (can be increased based on needs)

Configuration Tips

- Set appropriate temperature settings (0.6-0.7 for coding tasks)

- Use TopP=0.8-0.95 depending on your model

- Avoid greedy decoding to prevent performance degradation

Getting Started

To begin using Qwen Code effectively:

- Start with smaller models to test your setup

- Experiment with different prompting strategies

- Leverage the tool’s ability to understand large codebases

- Use it for automated documentation and testing

- Explore workflow automation features for repetitive tasks

The Future of Local AI Coding

Qwen Code represents a significant step toward democratizing AI-powered development tools. By offering a powerful, local alternative to cloud-based solutions, it addresses key concerns around privacy, cost, and dependency on external services.

The open-source nature of the Qwen ecosystem also means rapid innovation and community contributions, potentially leading to specialized variants optimized for specific programming languages or development workflows.

Conclusion

While Claude Code has pioneered the command-line AI coding assistant space, Qwen Code offers a compelling alternative that brings similar capabilities to your local environment. With its powerful models, extensive language support, and cost-effective local deployment, it’s worth exploring for developers who value privacy, customization, and independence from cloud services.

Whether you’re working on personal projects or enterprise applications, Qwen Code provides a robust foundation for AI-assisted development that grows with your needs. Try it out with Ollama today and experience the future of local AI coding assistance.

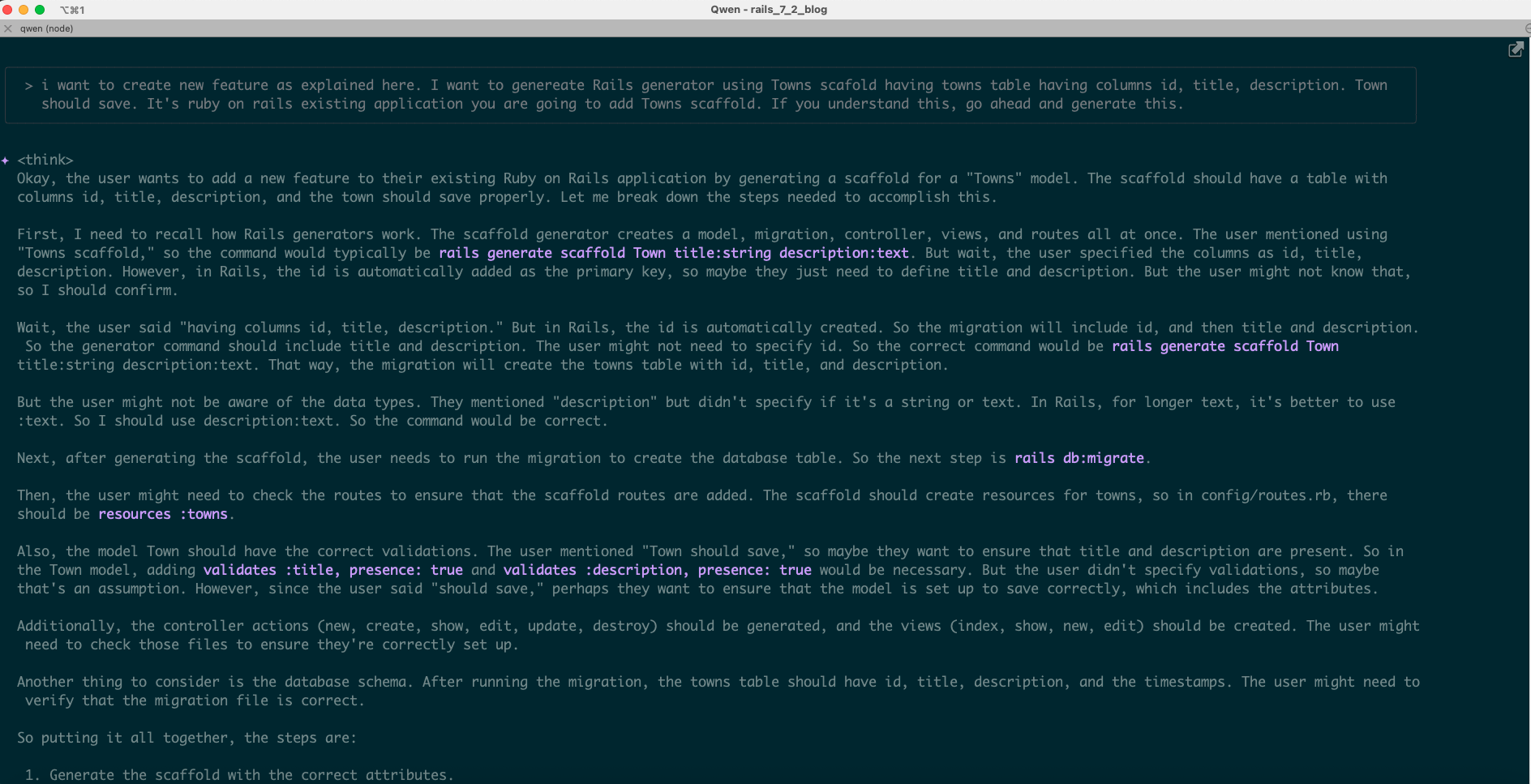

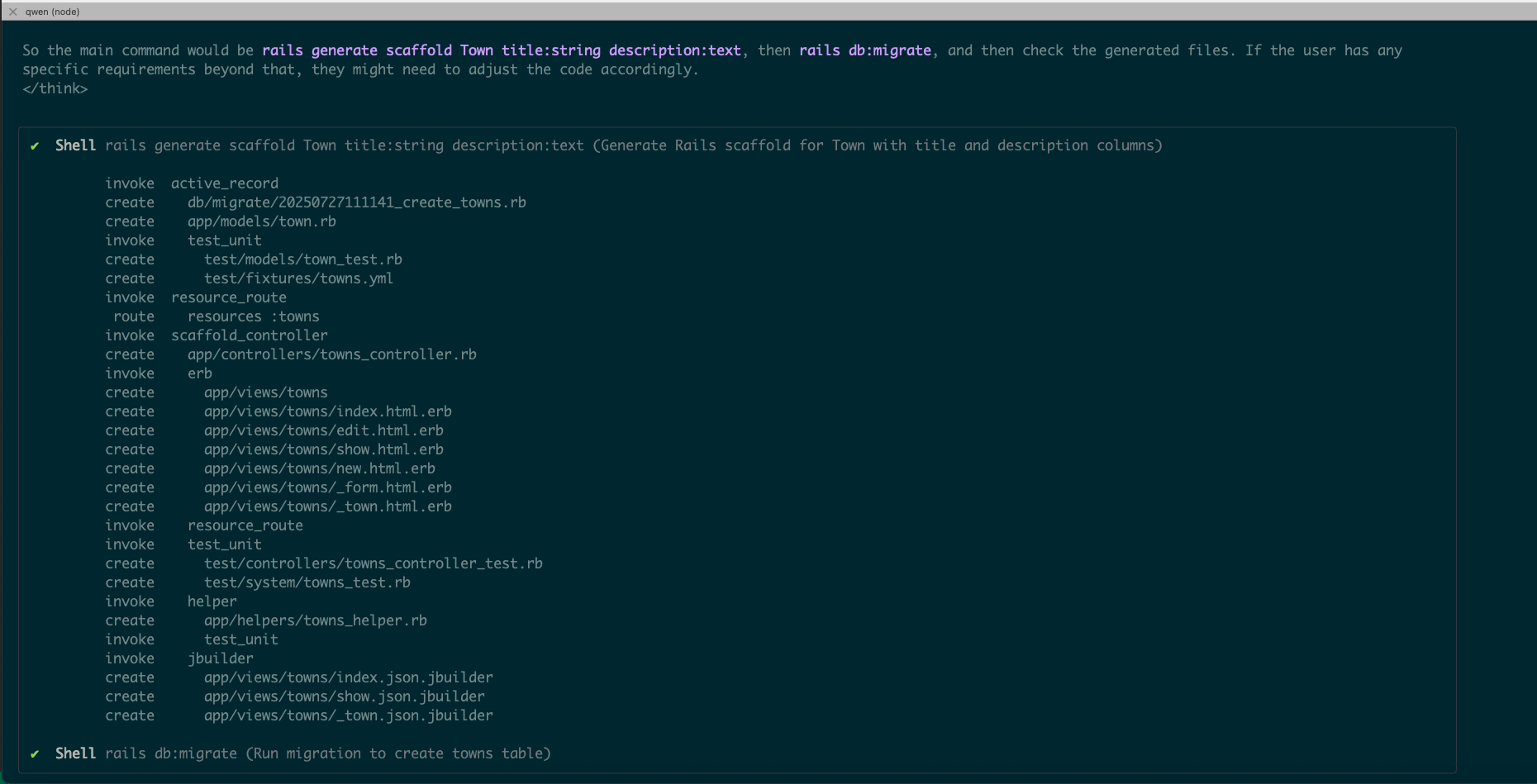

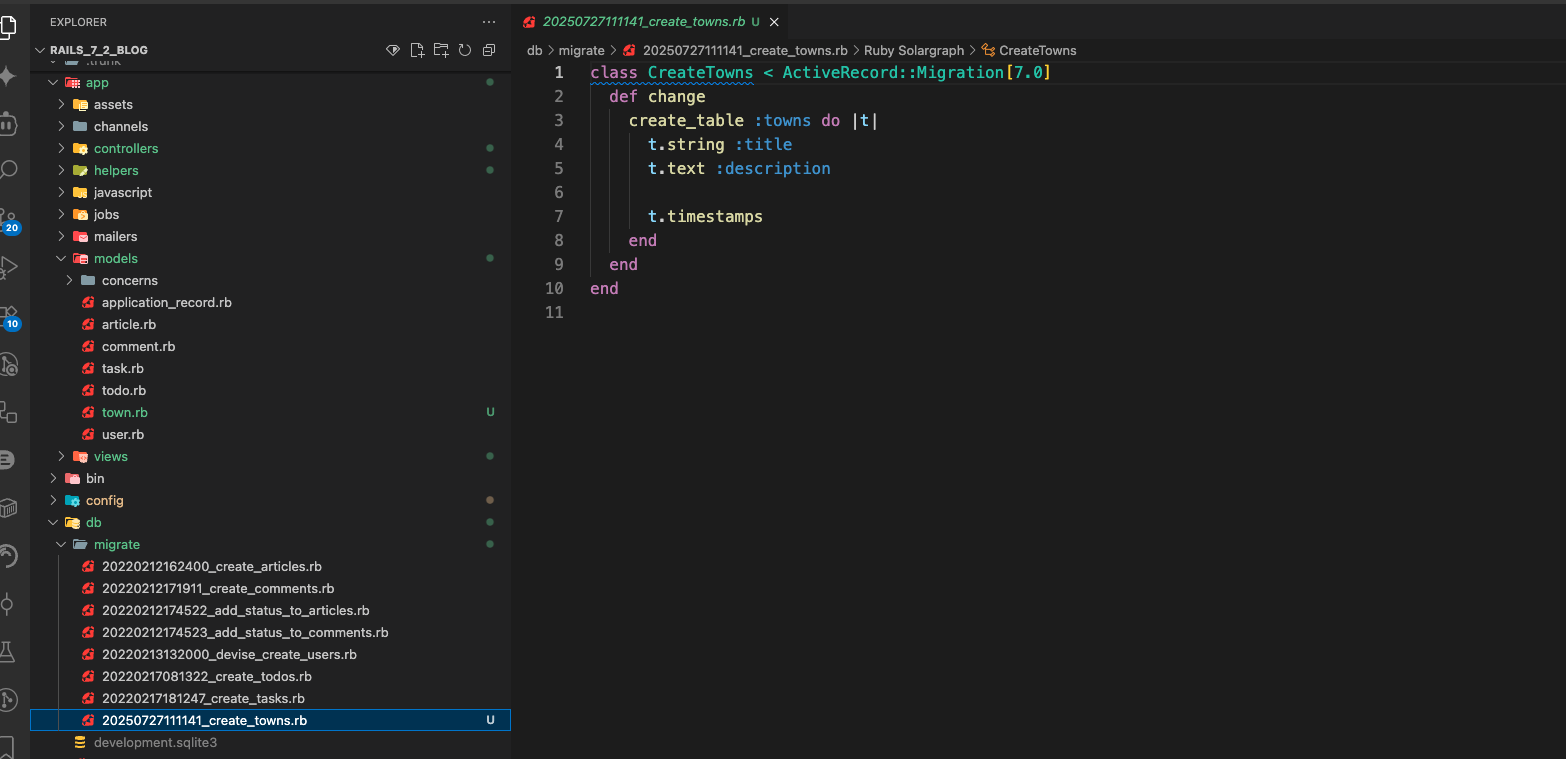

Qwen-Code in Action: A Visual Walkthrough

To demonstrate Qwen-Code’s capabilities in practice, here’s a step-by-step visual guide showing the feature: